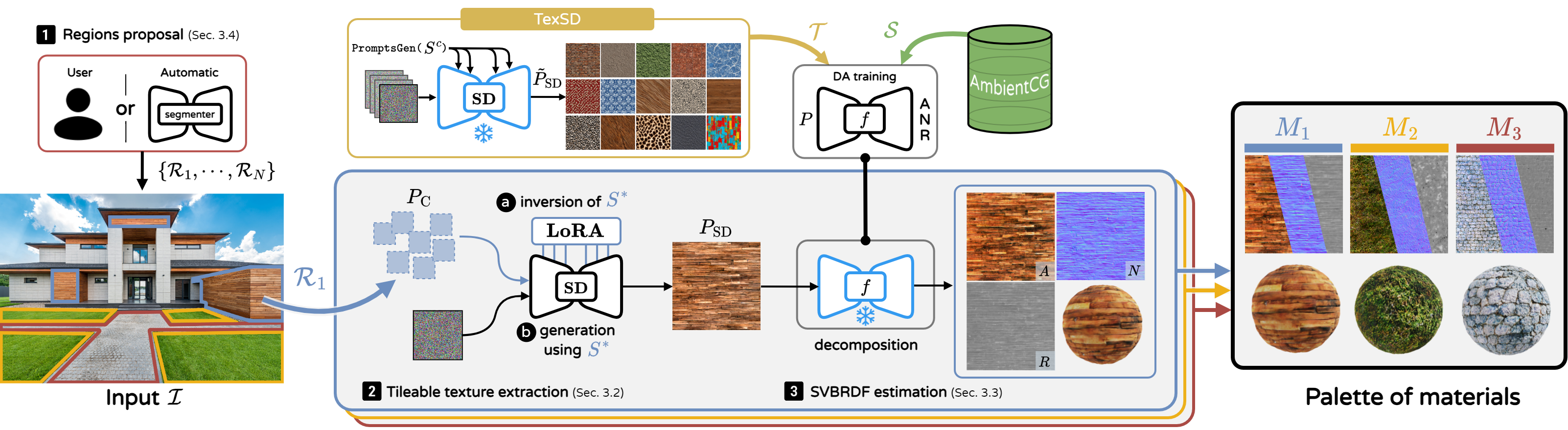

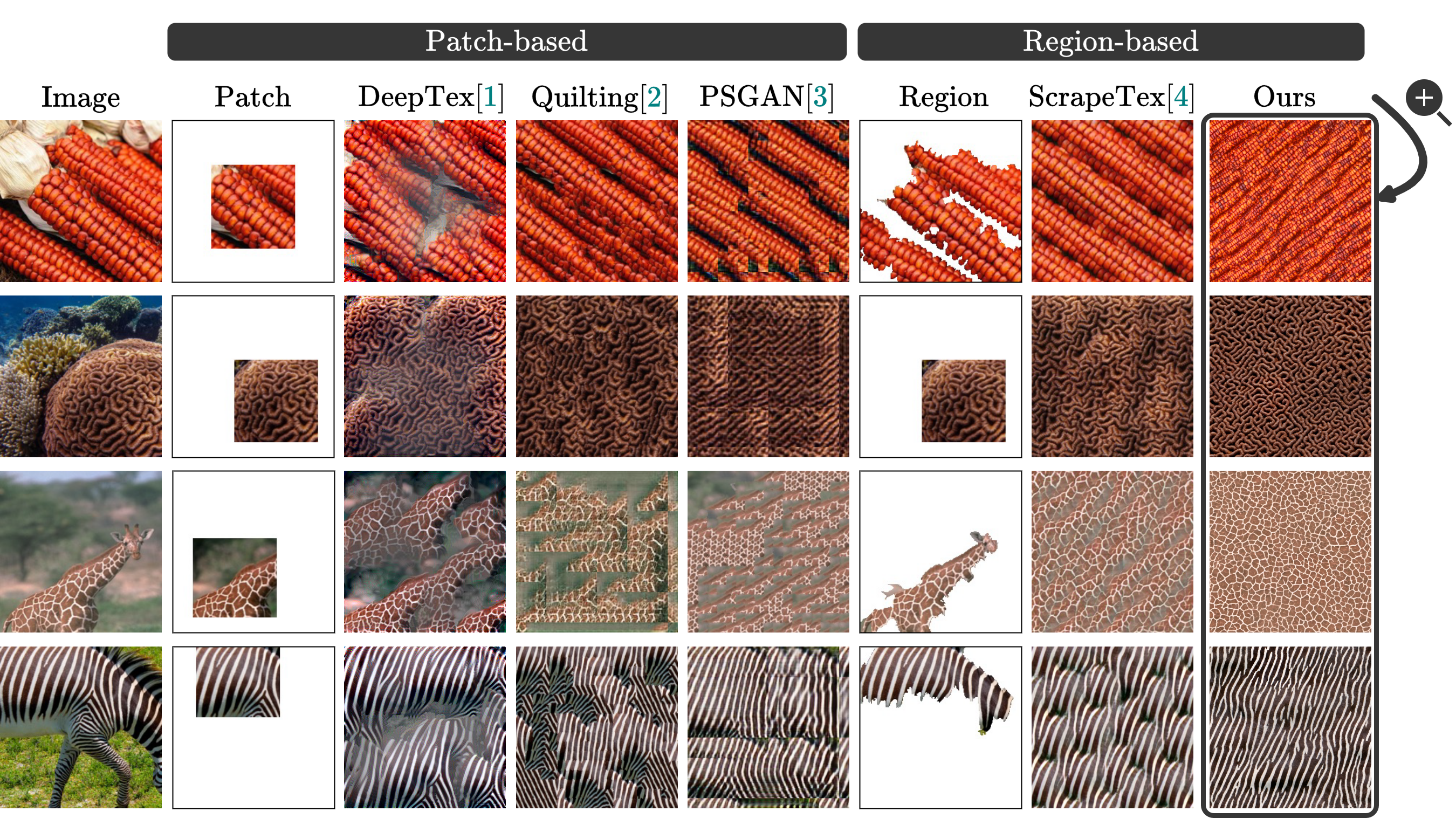

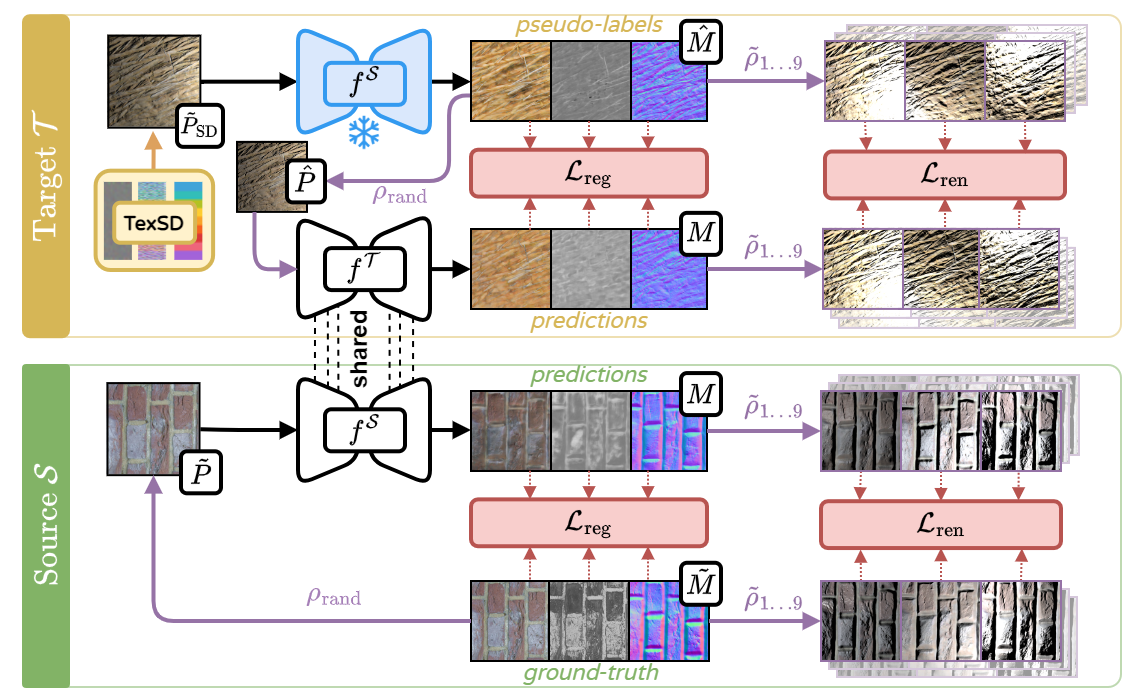

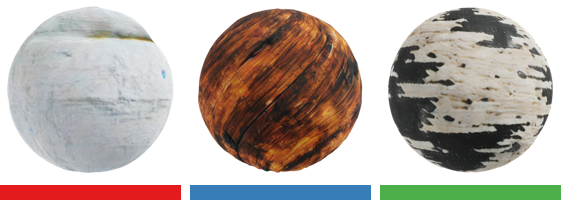

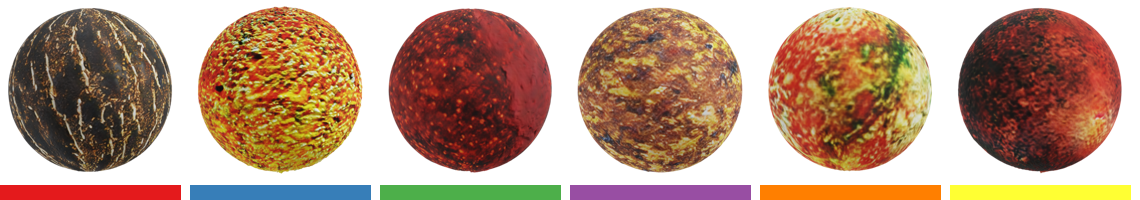

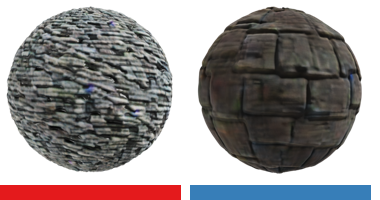

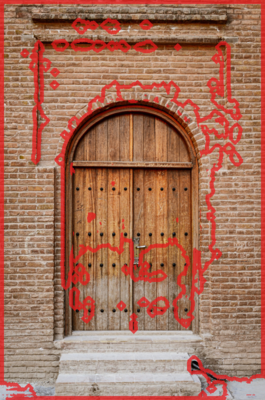

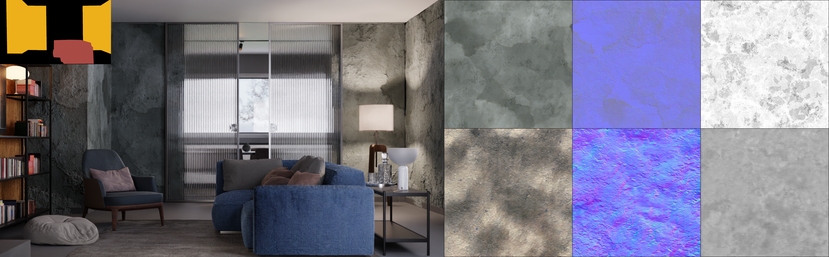

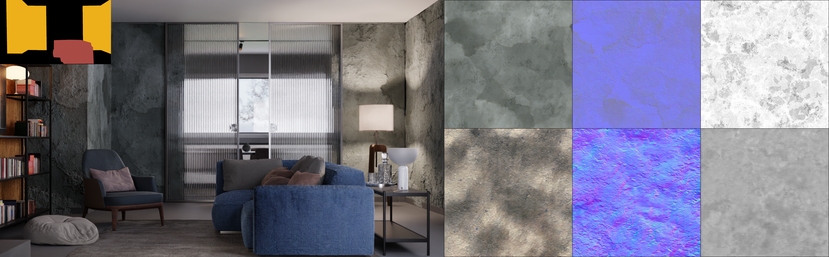

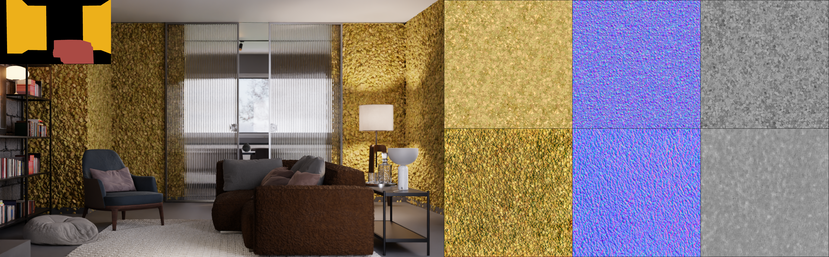

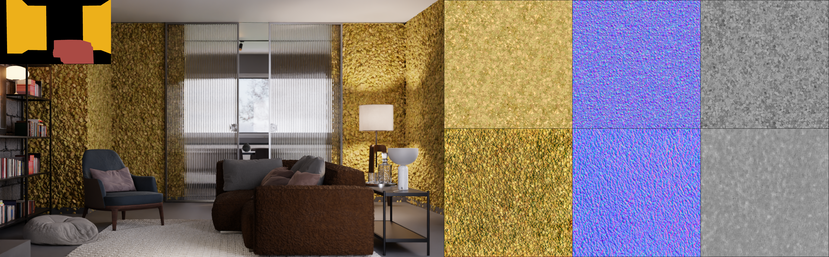

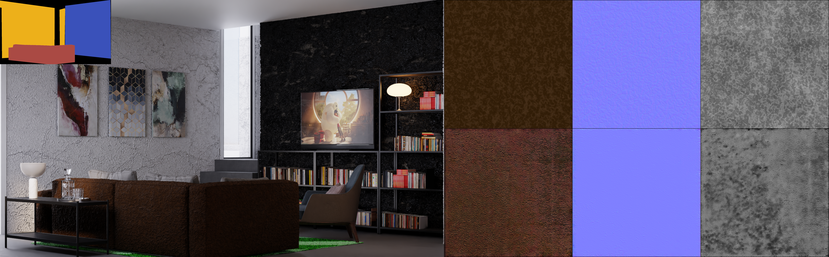

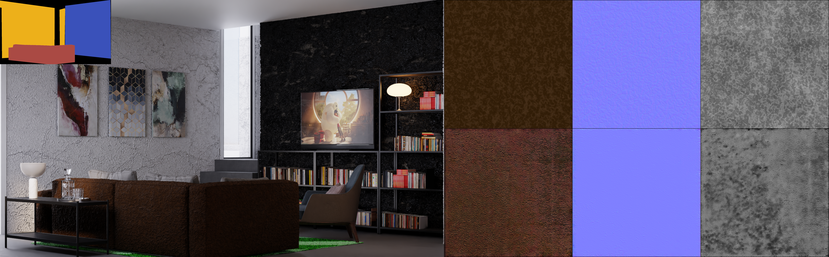

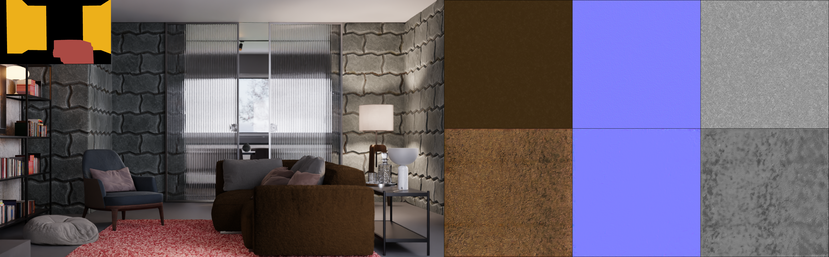

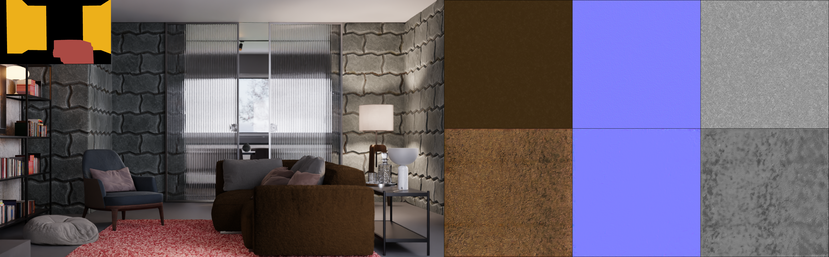

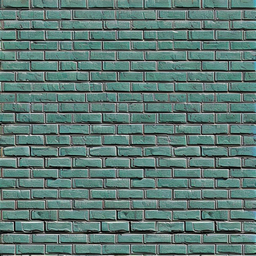

In this paper, we propose a method to extract Physically Based Rendering (PBR) materials from a single real-world image. We do so in two steps. First, we map regions of the image to material concepts using a diffusion model, which lets us sample texture images resembling each material in the scene. Second, we use a separate network to decompose the generated textures into Spatially Varying BRDFs (SVBRDFs), providing materials ready to be used in rendering applications. Our approach builds on existing synthetic material libraries with SVBRDF ground truth. It also exploits a diffusion-generated RGB texture dataset and uses unsupervised domain adaptation (UDA) to generalize to new samples. Our contributions are evaluated on both synthetic and real-world datasets. We further demonstrate that our method can be used to edit 3D scenes with materials estimated from real photographs.
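To illustrate what "materials ready to be used in rendering applications" means in practice, here is a minimal sketch of shading SVBRDF maps. The sketch makes several assumptions that this page does not state. It uses a parameterization common in single-image SVBRDF estimation: per-pixel diffuse albedo, specular albedo, roughness, and normal. It uses a Cook-Torrance model with a GGX distribution, Schlick Fresnel, and a Smith-Schlick geometry term. Finally, it assumes a distant light and an orthographic view. `shade_pixel` and `render_maps` are hypothetical helpers, not part of the Material Palette code.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Return v scaled to unit length (zero vector is returned unchanged)."""
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def shade_pixel(diffuse: Vec3, specular: Vec3, roughness: float,
                normal: Vec3, light_dir: Vec3, view_dir: Vec3) -> Vec3:
    """Outgoing RGB radiance for a unit-intensity distant light.

    Assumed model: Lambertian diffuse + Cook-Torrance specular (GGX D,
    Schlick F, Smith-Schlick G). Not necessarily the paper's exact BRDF.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    v = normalize(view_dir)
    n_l = max(dot(n, l), 0.0)
    if n_l == 0.0:                      # light is below the surface
        return (0.0, 0.0, 0.0)
    h = normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))  # half vector
    n_v = max(dot(n, v), 1e-4)          # avoid division by zero at grazing view
    n_h = max(dot(n, h), 0.0)
    v_h = max(dot(v, h), 0.0)

    alpha = max(roughness, 1e-3) ** 2   # perceptual roughness -> GGX alpha
    denom = n_h * n_h * (alpha * alpha - 1.0) + 1.0
    d = alpha * alpha / (math.pi * denom * denom)           # GGX distribution
    k = alpha / 2.0
    g = (n_l / (n_l * (1.0 - k) + k)) * (n_v / (n_v * (1.0 - k) + k))

    out = []
    for kd, ks in zip(diffuse, specular):
        f = ks + (1.0 - ks) * (1.0 - v_h) ** 5              # Schlick Fresnel
        spec = d * g * f / (4.0 * n_l * n_v)
        out.append((kd / math.pi + spec) * n_l)
    return (out[0], out[1], out[2])


def render_maps(diffuse_map: List[List[Vec3]], specular_map: List[List[Vec3]],
                roughness_map: List[List[float]], normal_map: List[List[Vec3]],
                light_dir: Vec3, view_dir: Vec3) -> List[List[Vec3]]:
    """Shade every pixel of the SVBRDF maps with the same light and view."""
    return [
        [shade_pixel(d, s, r, n, light_dir, view_dir)
         for d, s, r, n in zip(d_row, s_row, r_row, n_row)]
        for d_row, s_row, r_row, n_row in zip(
            diffuse_map, specular_map, roughness_map, normal_map)
    ]
```

For example, a 1x1 material with diffuse albedo 0.5, no specular, and a flat normal, lit and viewed head-on, renders to 0.5/π per channel. Real renderers such as Blender use their own BRDF conventions, so estimated maps may need remapping before import.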

This research project was mainly funded by the French Agence Nationale de la Recherche (ANR) as part of project SIGHT (ANR-20-CE23-0016). Results were obtained using HPC resources from GENCI-IDRIS (Grant 2023-AD011014389). Fabio Pizzati was partially funded by KAUST (Grant DFR07910).

The repository contains code adapted from PEFT, SVBRDF-Estimation, and DenseMTL. For visualization, we used DeepBump and Blender. Credit to Runway for providing the Stable Diffusion v1.5 model weights. All images and 3D scenes used in this work have permissive licenses. Special thanks to AmbientCG for their tremendous work.

We would also like to thank all members of Astra-Vision for their valuable feedback.

@inproceedings{lopes2023material,
  author = {Lopes, Ivan and Pizzati, Fabio and de Charette, Raoul},
  title = {Material Palette: Extraction of Materials from a Single Image},
  booktitle = {CVPR},
  year = {2024},
  project = {https://astra-vision.github.io/MaterialPalette/}
}